The race for mass-market self-driving cars is well and truly on.

Mercedes-Benz recently tested an autonomous S-class on public roads and declared it wants to be first to market such a car, tentatively suggesting it would do so this decade. But Nissan has boldly announced plans to sell affordable self-driving cars by 2020, so we took the chance to assess its progress via an autonomous Nissan Leaf prototype.

It’s all part of Nissan’s ‘Blue Citizenship’ social responsibility plan, a trinity of goals consisting of zero emissions (the Leaf is already the world’s best-selling EV by far), near-zero fatalities and mobility for all. It’s the latter two aims that autonomous driving will tackle; after all, 93 per cent of the US’s road accidents are caused by human error, and an autonomous car could also grant new independence to those unable to drive.

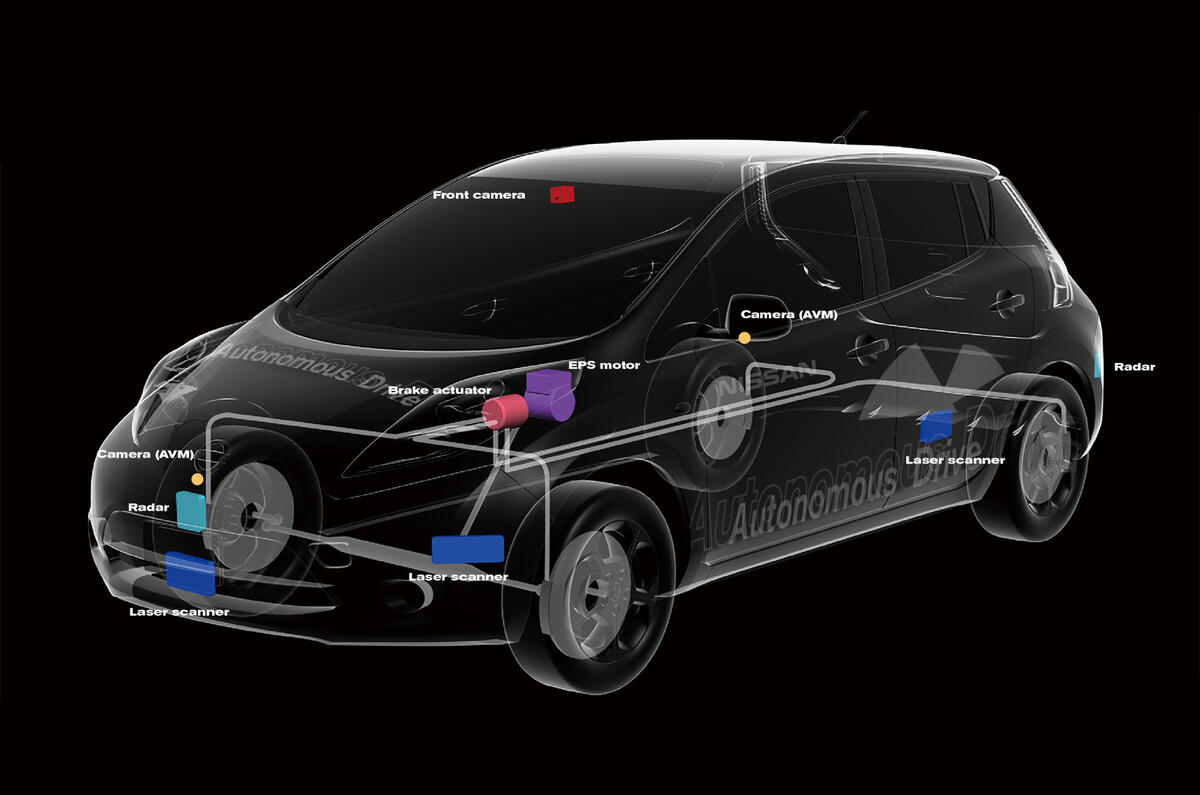

The tech is based on Nissan’s existing ‘Safety Shield’, a radar and camera-based set-up that has featured on 730,000 cars to date, bringing with it features such as lane departure warning, adaptive cruise and all-around camera views. The autonomous Leaf adds continuously scanning lasers (ten times more precise than radar), a raft of new software algorithms to fuse, process and react to sensor feeds, and actuation systems for the steering, accelerator and brakes. (Only the steering wheel is physically actuated; the Leaf’s accelerator and brakes are electronically controlled, so there’s no need for pedal movement, and the controls retain precautionary manual override.)

Autonomous driving is said to be safer simply because machines work more quickly than we do. Our eyes capture fewer frames per second than a high-speed camera, our brain processes data and reacts to it more slowly than a CPU, and our hands and feet are trounced for pace by electronic actuators.

In addition to safety and mobility benefits, autonomous cars would also relieve us of driving’s regular tedium. Why waste your attention edging through town or slogging up the motorway when you could be reading or working? Or, as one demo showed us, why spend your time parking when you could be shopping?

Nissan says the logical progression is for cars that communicate with each other to create optimum traffic flow without stop lights or lanes. Same space, more cars, less congestion. The company’s laser-equipped knee-high robots (‘EPOROs’) preview the possibility by moving together like a school of fish.

Here’s the technology that will move autonomous vehicles from the blackboard to the blacktop.

Self-parking

Once the driver steps out, a ‘valet’ button on the key fob locks the doors and sets the car off to find a space, having recorded the drop-off point by GPS. Using lasers and cameras, the Leaf navigates around cars (both parked and moving) and painted lanes. When a space is identified, the Leaf signals, pulls past it, checks the size of the gap and then reverses in with the help of conventional radar sensors. Shopping done, the owner presses the valet button again and the Leaf navigates its way back to the starting point.
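For readers who think in code, the valet sequence described above can be pictured as a simple state machine. The sketch below is purely illustrative and is not Nissan’s software: the state names, the event names and the rule that a too-small gap sends the car back to searching are all assumptions made for the example.

```python
from enum import Enum, auto


class ValetState(Enum):
    """Hypothetical stages of the valet parking sequence."""
    IDLE = auto()           # driver has stepped out, drop-off point recorded
    SEARCHING = auto()      # navigating around cars and painted lanes
    SIGNALLING = auto()     # a candidate space has been identified
    PULLING_PAST = auto()   # driving past the space
    MEASURING_GAP = auto()  # checking the size of the gap
    REVERSING_IN = auto()   # backing in with the radar sensors' help
    PARKED = auto()
    RETURNING = auto()      # heading back to the recorded drop-off point


# (current state, event) -> next state. Event names are invented for this sketch.
TRANSITIONS = {
    (ValetState.IDLE, "valet_pressed"): ValetState.SEARCHING,
    (ValetState.SEARCHING, "space_seen"): ValetState.SIGNALLING,
    (ValetState.SIGNALLING, "signal_on"): ValetState.PULLING_PAST,
    (ValetState.PULLING_PAST, "past_space"): ValetState.MEASURING_GAP,
    (ValetState.MEASURING_GAP, "gap_ok"): ValetState.REVERSING_IN,
    # Assumption: the article does not say what happens if the gap is too small.
    (ValetState.MEASURING_GAP, "gap_too_small"): ValetState.SEARCHING,
    (ValetState.REVERSING_IN, "in_space"): ValetState.PARKED,
    (ValetState.PARKED, "valet_pressed"): ValetState.RETURNING,
    (ValetState.RETURNING, "at_drop_off"): ValetState.IDLE,
}


def step(state, event):
    """Return the next state; events that do not apply leave the state unchanged."""
    return TRANSITIONS.get((state, event), state)


def run(events, state=ValetState.IDLE):
    """Feed a sequence of events through the machine and return the final state."""
    for event in events:
        state = step(state, event)
    return state
```

Feeding in the events for one full shopping trip (press valet, find and enter a space, press valet again, arrive at the drop-off point) brings the machine back to `IDLE`.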

Sensor fusion

Fully equipped, the autonomous Leaf uses four cameras that combine to give a near-360-degree view (the front camera is a hi-res, long-range unit to help read road signs). A front radar sensor reads up to 200m ahead of the car, and there is a further radar sensor at each rear corner (their arrays overlap and extend to 70m). Then there’s the new bit: six laser scanners, positioned front, rear and at each corner. These scanners are the boxy addenda you see on the silver car and have a useful range of 100m. The laser control unit in the rear of the car collates the feeds, then sends signals to the steering actuator, accelerator and brakes.
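To make the sensor count concrete, the set-up can be written down as a small table in code. This is a rough sketch, not Nissan’s data model: the `Sensor` class and the helper functions are invented for illustration, the positions of three of the cameras are not given in the description above, and the range check ignores which way each sensor points.

```python
from collections import Counter
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Sensor:
    kind: str
    position: str
    range_m: Optional[float]  # None where no range figure is quoted


CORNERS = ["front-left", "front-right", "rear-left", "rear-right"]

SENSORS = (
    # Four cameras; only the front unit's position is stated.
    [Sensor("camera", "front", None)]
    + [Sensor("camera", "unspecified", None) for _ in range(3)]
    # One front radar (200m) and one at each rear corner (70m).
    + [Sensor("radar", "front", 200.0)]
    + [Sensor("radar", pos, 70.0) for pos in ("rear-left", "rear-right")]
    # Six laser scanners (100m): front, rear and each corner.
    + [Sensor("laser", pos, 100.0) for pos in ["front", "rear"] + CORNERS]
)


def count_by_kind(sensors):
    """Count how many sensors of each kind are fitted."""
    return dict(Counter(s.kind for s in sensors))


def in_range(sensors, distance_m):
    """Sensors whose quoted range reaches distance_m (direction is ignored)."""
    return [s for s in sensors if s.range_m is not None and s.range_m >= distance_m]
```

On these figures, only the front radar has the quoted range to reach an object 150m away, while the laser scanners join in once it is within 100m.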

Join the debate

the end of driving

everyone seems to miss the major implication of autonomous cars - they'll be so much safer than human drivers that manual operation will be banned, it's as simple as that. don't you get it???!

parsley wrote: everyone seems

There is nothing to get, at least not for a very long time.

Taxi drivers' worst nightmare.

Great for driving the ex rover blue rinse brigade. It will make the roads 100 times safer.

Car manufacturers...

...need to be careful what they wish for.

Cars are an extension of the human desire for freedom, we choose certain types because they say something about us. The driving experience is part of that, we like the freedom to choose what speed we want to go and which route to take. We clean our cars and spend money on them because they are more than mere possessions.

If the car were to be autonomous, to drive itself, the ex-driver becomes a passenger, where is the desire to own something 'nice' then? I might as well be in a taxi. The car becomes a tool, an appliance. I will then have no desire to be loyal to a certain brand, to seek out car reviews, to be excited about motoring in any way. The car industry as we know it would fail to exist.

Careful what you wish for, you might be getting rid of your livelihood without realising it.