The Massachusetts Institute of Technology (MIT) is surveying the public on which decisions autonomous cars should make in fatal situations.

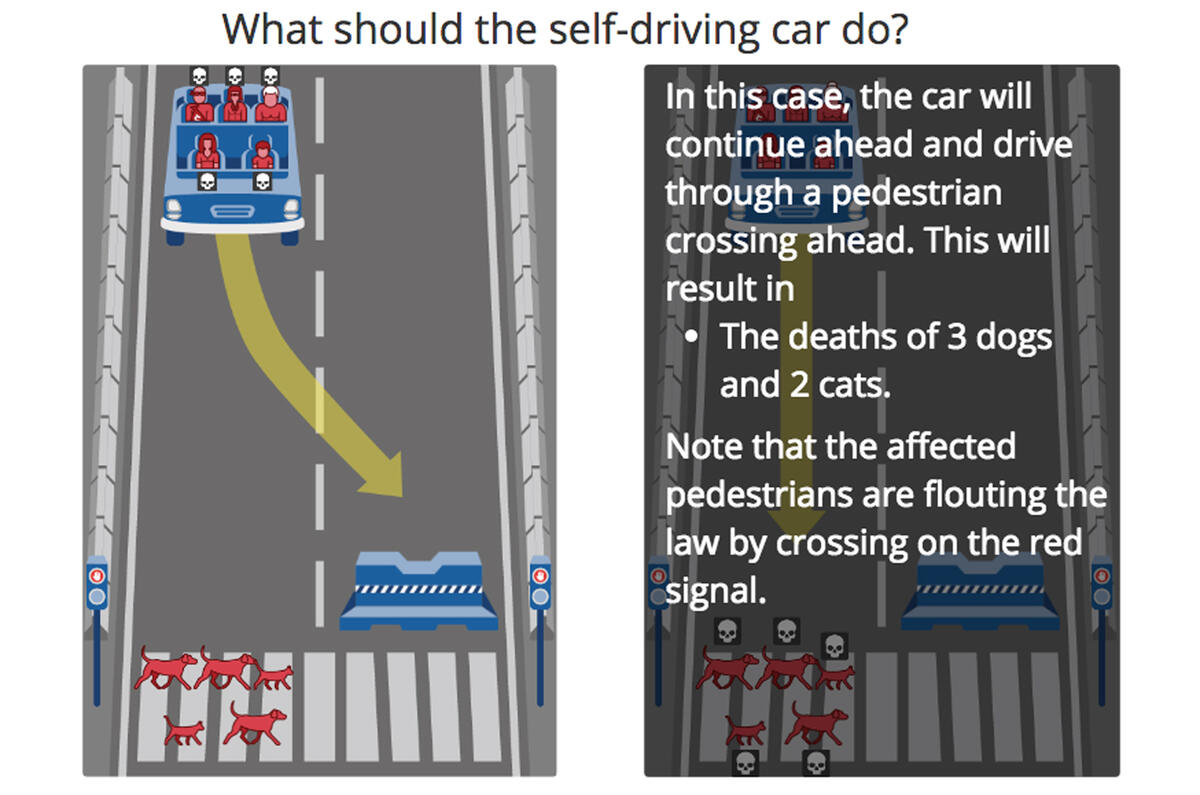

MIT’s ‘Moral Machine’ presents the public with numerous scenarios in which an autonomous vehicle must decide whom to kill. Respondents are given two choices, and in each one lives will be lost; there is no non-fatal option. Each scenario is shown as a graphic and accompanied by a written explanation, so that the situation and its victims are clear.

Each individual’s answers are then compared with the overall answer patterns to show where that person sits on a series of scales, each reflecting a different circumstance within the scenarios.

For example, the results show whether the individual favours young people over the elderly, those upholding the law over those flouting it (for example, a pedestrian who walks into the road when the crossing light indicates not to cross), and passengers in the autonomous vehicle over other road users.

Patterns have already appeared in users’ answers, including strong preferences for saving younger people and people with ‘higher social value’. In the given examples, a doctor represents someone with high social value and a bank robber someone with low social value.

Another strong preference, unsurprisingly, was to save human lives rather than the lives of pets. Users were split almost 50/50 on whether to save the passengers or the other potential victims, and similarly on whether to protect physically fit people or overweight people.

Sahar Danesh, IET principal policy advisor for transport, said: "The machine will always make the decision it has been programmed to make. It won't have started developing ideas without a database to tap into; with this database, decisions can then be made by the machine. With so many people's lives at stake, what should the priority be? It's good to test it in this virtual context, then bring in other industries."

Join the debate


Question unanswered: who is to blame?

Always save the passengers

If we program self-driving cars to save the pedestrian(s), then what's to stop malicious pedestrians from purposely walking out in front of a self-driving car in order to murder its passengers and get away with it?

All they have to say is, "I didn't see the car coming." And there would be no way to prove otherwise.

I know some may think it's unethical, but the truth is that people are unpredictable and malicious, and unless we come up with a way for the car to read the thoughts of the pedestrian it's about to hit, the car should always choose to protect those we know are faultless: the passengers.

If we tell self-driving cars to protect pedestrians, we are just giving criminals an easy way to commit murder and get away with it.

Lake District!